Some are enthralled by generative AI, and advocate a future defined by it. Some fear it will rot our society or even destroy us all. And many loathe it, refuse to even call it “AI,” and do not conceal their contempt for both the believers and the frightened. What’s more, all of these attitudes are moving from ‘personal beliefs’ to ‘pervasive unquestioned assumptions,’ becoming the water in which entire communities swim.

I have an unusual perspective here; I have a weird life that spans all three groups. I’ve attended “AI safety” dinners with boldface-in-that-world names like Paul Christiano and Ajeya Cotra, representing Metaculus, which cites AI risk as a focus area. I live in the world of authors and artists (as a reasonably successful novelist) … almost all of whom, even the Science Fiction Writers Association, seem firmly in the ‘loathe’ camp, joined by many/most political ‘progressives,’ another cohort in which I count myself.

I’m here to say that all of them are wrong. Of course I’m an AI believer myself—I’ve co-founded an AI startup after all. But it was a very conscious decision, in the face of the fear and loathing of my other worlds. I’m writing this piece to itemize the many reasons why the fear and loathing are wrong … and to have a URL I can link to / metaphorical sign to tap when the subject comes up.

What follows takes the form of the great Maciej Ceglowski’s Superintelligence: The Idea That Eats Smart People, partly because it already covers much of the AI-will-kill-us-all stuff (now so overdiscussed it has somehow managed to make an ‘existential risk to humanity’ actually boring), partly because it’s fun. So, sans further ado, here’s why/how to stop worrying and neither fear nor loathe AI:

The Argument Against Nomenclature

This honestly seems incredibly silly to me, and yet it seems to upset so many so much. “How can you call it AI?! It’s not really intelligent!” Whenever asked to define ‘real’ intelligence, though, it almost invariably turns out that the critic in question is fundamentally confusing intelligence and consciousness.

No one is claiming GPT-4 is conscious. But it’s a running joke in the field that whenever a computer achieves anything related to intelligence, ‘artificial intelligence’ is immediately and angrily redefined to exclude that new achievement. Imagine traveling back in time twenty years and asking an ordinary person “If an advanced future computer system could, in response to a sentence or two, write poetry / record songs / create art / generate movies, extemporize detailed answers and conversations in any language, write software, diagnose medical conditions, pass bar exams, and basically act as a general-purpose amanuensis, would you call that at least some kind of artificial intelligence?” How do you think they would answer? Be honest now. Yeah.

The Argument Against Illegality

Lawyers generally have a nuanced view of generative AI. Many furious non-lawyers, however, are convinced that its very existence is theft, that “it’s trained on scraped copyrighted data, therefore it is clearly illegal!”

I hold many copyrights on writing, mostly fiction, which have over the years earned me roughly half a million dollars, so you might expect me to be sympathetic. I am not. I am no lawyer but I know this is nonsense. First, if generative AI was obvious theft, people would have sought injunctive relief to, y’know, shut down ChatGPT. They haven’t done so because its actual legal status is currently, at worst, in flux.

Copyright is fundamentally about the right to copy—it’s right there in the name!—and LLMs are not actually used to make copies of their training data. (Yes, the New York Times sued OpenAI on those grounds, but come on, in the real world the only people who have ever used ChatGPT to try to recreate existing NYT articles are the people who filed that suit.) It’s true that there are some good arguments that generative AI is not transformative ‘fair use.’ But it’s also true that there are very strong arguments that it is, and it’s annoyingly disingenuous for either side to pretend otherwise.

This is what happens with significant new technologies! They fit existing laws awkwardly, at best, so new laws are needed. I don’t know exactly what those laws will be, or how various supreme courts will eventually rule on fair use and derivative works; but the claim that “generative AI is built on theft” is patently legally ridiculous.

The Argument Against Immorality

When pressed, the “it’s clearly theft!” people will usually concede that they don’t actually mean it’s illegal per se(!), and (often after a side rant about how the corrupt courts are basically for sale in these days of late capitalism) go on to explain that they mean it’s immoral for rich tech companies to profit from the copyrighted work of artists and writers.

This is a deeply incoherent argument — after all, copyright is a legal not moral term, there was no stone tablet brought down from Mount Sinai on which was written “Thou Shalt Not Make Copies Until After The Life Of The Artist Plus 75 Years Because Disney, Amirite? :/” — but a stronger one nonetheless. The point of copyright law is to ensure that both creators and society benefit from their creations. Artists and writers should, morally, be compensated for derivative works. But it’s a stretch beyond even the capabilities of Elastigirl to claim that any particular output of a large language model is a “derivative work” of any writer, except maybe in a handful of weird edge cases. The oceans of training data — 15 trillion tokens is roughly 100,000,000 book-length works! — are far too immense to attribute anything they wash up to individual droplets.

The notion that it’s morally wrong for AI to be trained on creators’ work — to learn from it, rather than copy it — makes even less sense to me. It sounds akin to saying that authors should be allowed to forbid selected people, or selected companies, not just from copying their work but from ever reading it. That … is not the path of moral righteousness. Once your work is out there in the world, it’s there for anyone to encounter and learn from. Authors are not paid royalties for books they influenced, nor should they be. And note again the infinitesimal scale that we’re talking about. I’ve published more than a million words of paid fiction and journalism, and my ‘influence’ on a model trained on 15 trillion tokens which include my work would be on the order of 1/10,000,000, beyond negligible, less than the ‘influence’ that a single resident of New York City has on the pulsating life of the entire city. There is a point at which any sense of individual contribution breaks down, and AI is way past it.
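For anyone who wants to check the scale claims, here is a rough back-of-envelope sketch. The 15 trillion tokens and the million words come from the text above; the words-per-token ratio and the length of a "book-length work" are my own assumed rules of thumb, so treat the results as order-of-magnitude only.

```python
# Back-of-envelope check of the scale argument.
# Assumptions (not from the essay): ~0.75 English words per token,
# and ~110,000 words for a "book-length work".
TRAINING_TOKENS = 15e12        # corpus size quoted in the essay
WORDS_PER_TOKEN = 0.75         # assumed rule of thumb
WORDS_PER_BOOK = 110_000       # assumed typical novel length
AUTHOR_WORDS = 1_000_000       # "more than a million words" from the essay


def book_equivalents(tokens: float) -> float:
    """Number of book-length works a corpus of `tokens` tokens represents."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_BOOK


def author_share(author_words: float, tokens: float) -> float:
    """Fraction of the corpus contributed by one author, measured in tokens."""
    author_tokens = author_words / WORDS_PER_TOKEN
    return author_tokens / tokens


books = book_equivalents(TRAINING_TOKENS)
share = author_share(AUTHOR_WORDS, TRAINING_TOKENS)
print(f"book equivalents: {books:,.0f}")
print(f"author share: 1 in {1 / share:,.0f}")
```

Under those assumptions the corpus comes out at roughly a hundred million books, and a million-word author at roughly one part in eleven million, which is in line with the figures quoted above.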

Building a collective corpus of knowledge that anyone can learn from is what culture is for. If you want OpenAI to buy a single copy of every book in print to train GPT-5 on them, sure, I agree … but do the math; this will make such a ridiculously trivial difference to both their costs and writers’ incomes it’s barely worth mentioning. And that requirement would not be at all the same as trying to forbid anyone, or any company, from ever reading / looking at / learning from a published work.

However. Image generation, via diffusion models rather than LLMs (most AI critics don’t really understand that there’s a difference…) can be another story. I agree that AI outputs which are clearly the derivative work of an individual living creator should be licensed, and in the absence of licenses, are wrong. In practice, this means images generated “in the style of” distinctive artists. Again, this is why we need new laws! (I have previously proposed some to handle just this case.) But, also/again, this is how new tools and technologies work! They can be used for good and ill, but the ill does not invalidate the good.

I agree that there absolutely are some objectionable AI outputs. But analogizing these early days of AI to the early days of photography, objecting to training isn’t like saying that photographers shouldn’t sell copies of photos of paintings; it’s like saying it’s morally wrong to ever photograph paintings at all. The day cameras were invented, they could be used for immoral purposes such as revenge porn. But that doesn’t mean that cameras and photography are wrong and evil.

…What’s that? Um. No. No, not even if you really, really don’t like photographers.

The Argument Against Techbros

Often the real anti-AI rage, the target and taproot of the loathing, is not generative AI per se, but rather its creators. Over the last decade, ‘techbros’—cartoonish figures of ignorance, hubris, and often malevolence—have become culture’s villains of choice. (There are too many examples to cite.) I think there are three root causes:

The tech industry has become both enormously wealthy and a powerful engine of change affecting everyone on the planet—arguably the only effective engine of change in the West, where governments are paralyzed, and traditional industries (see e.g. Boeing) are sclerotic—and with that comes more and stricter scrutiny than in the days of nerds in garages who occasionally got rich.

People do not understand how the tech industry works. The whole point of the startup ecosystem is to try a zillion experiments, many of which sound and/or are stupid or awful, and fail. We do this because we have learned that occasionally, experiments which sound stupid/awful … turn into tsunamis of the future.

The vengeful fury of journalism.

I should probably pause here to say that much of the criticism of the tech industry and its missteps is entirely valid, or at least contains kernels of truth. Lord knows I’ve penned plenty of such criticism myself. Venture capital can lead to perverse incentives. Services do tend to get worse and greedier over time (though, as Boeing shows, this is hardly limited to tech). The industry has attracted more people driven by the desire to get rich rather than the need to build — siphon, not forge, people.

(It’s also true that people post anti-tech-industry screeds to Discord servers from their iPhones while riding in Model 3s driven by Lyft drivers; that their real complaint is often capitalism itself, nothing to do with tech; and some is just classic tall-poppy syndrome. But I don’t think either this paragraph or the last actually represents the anti-techbro zeitgeist.)

Hedge fund titans objectively siphon out far more from society while contributing less, yet don’t provoke anything like the flamethrower ire aimed at ‘techbros.’ Raging against the one remaining engine of change, in large part because of failure to understand that experiments with apparently dumb ideas are fundamental to what it does, while the finance industry remains a giant vampire squid and the government a paralyzed dinosaur … seems misguided …

But not surprising, because journalism remains an immensely powerful force (which is good! mostly), quietly setting the temperature of the water in which we all swim, and journalism has an enormous grievance against the tech industry.

Note I don’t say “journalists.” I don’t mean on the individual level. I’m not saying there’s any conscious collusion. But people, including journalists, react in fairly predictable and understandable ways to provocations and incentives, and, well:

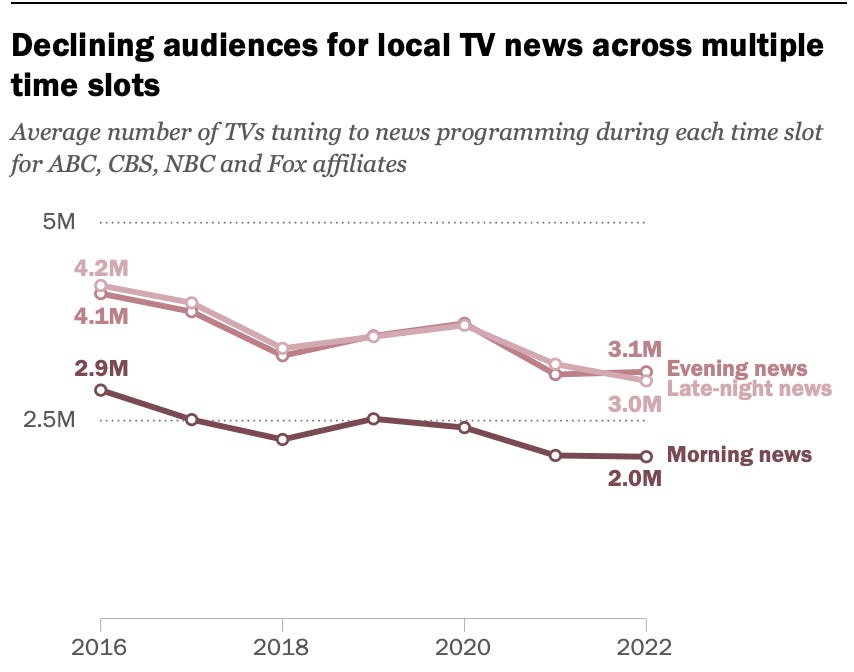

“Employment prospects have dwindled for people working in the journalism field or hoping to break into it. Our projections show that newsroom jobs—including news anchor, reporter, radio news anchor, staff writer, and television news reporter positions—will decline by roughly 3 percent from 2022 to 2031, adding to the long-term decline in journalism jobs. By 2031, the number of journalism jobs will have fallen by nearly 35 percent since 2002.” (cite)

Journalism used to be a secure, prestigious, even lucrative industry. Now look at it. What happened? The tech industry, is what happened. Craigslist ate classifieds. Google ate advertising. Social media ate control over distribution. Journalists have seen their entire industry, a force for good in which they believed, which gave them meaning, absolutely gutted by the tech industry. No wonder they tend to be a tiny bit biased against it! …And no wonder they’ve subtly, collectively, usually unconsciously, turned the cultural temperature up towards “boiling rage.”

I understand and sympathize with this all too human reaction. But precisely because it’s such an understandable human reaction, we can conclude that journalism has been heavily slanted against the tech industry over the last decade. In a more hypothetically objective world, ‘evil ignorant techbros’ would be viewed as occasionally-existent-but-not-representative edge cases amid a vast industry of many millions of people … rather than, y’know, its defining avatars … and tech’s latest remarkable advancement would be greeted with much less knee-jerk contempt.

The Argument Against Irrelevance

A more nuanced argument against modern AI, which you hear from people like Molly White and Ed Zitron—smart people who I respect—is that it’s a hype bubble for tech that isn’t actually good for anything, or anyway isn’t net good, and is ultimately not going to be a particularly relevant technology, except for ruining the web with AI-generated crap.

Of the two cites above, White is far more laudatory than Zitron…because she’s a coder. “It’s irrelevant hype!” is the argument that engineers find most baffling, because AI is already an extremely relevant and transformative technology for us, now, today. GitHub Copilot writes like 20% of my code these days. Not stuff that requires special analysis or synthesis, no … but it does write code that’s quite finicky, and/or requires solid understanding of a particular framework, fluently and well. I have looked at Copilot suggestions and muttered, impressed, to an empty room, “OK, that’s creepy.” Nowadays I often just wait for Copilot to fill in what I want rather than check the exact syntax I need.

Now, I have deep experience of dozens of languages and frameworks, and can sanity-check everything it says. But on the other hand, ChatGPT is enormously useful for more junior engineers. Studies consistently show that it helps junior people more than senior people. I have had junior colleagues who were brilliant but didn’t have the depth of knowledge that senior engineers accumulate; GPT-as-amanuensis was a huge game-changer for them.

It’s true that many other things that generative AI can do today are more cool or interesting than obviously productive. I’ve written about this myself; LLMs are agents of chaos. It’s also true that AI-generated content is choking our Internet … or, more precisely, our search engines and social media … but when you step back and think about what we might be transitioning away from, was that advertising-driven SEO-orchestrated social-media-distributed internet really such a wondrous utopia? I seem to recall rather a lot of criticism of it even before generative AI. I think a move back to a more curated, less SEO-driven internet will be a good thing.

None of these are why we believers are so convinced that AI is the New Big(gest) Thing though. That reason is simple: the trajectory. GPT-3 was a giant leap from GPT-2; GPT-4 was a huge bound further; now we have open-source-ish GPT-4 equivalents, and strong indicators that AI will keep getting much better for a while yet. (There may be a data wall, but not soon.)

To a very crude approximation, GPT-3 was like a high-school intern, GPT-4 more like a college intern. Molly White echoes that: “they are handy in the same way that it might occasionally be useful to delegate some tasks to an inexperienced and sometimes sloppy intern.” That is true, but:

it turns out there are many ways to automatically correct for the inexperience and sloppiness! Doing so is, in fact, modern AI engineering.

you can conjure up an arbitrary number of interns, and we expect them to keep getting better every year for quite some time.

…It seems unlikely, he understated, that an information workforce of arbitrary size that keeps getting more capable will be a social and economic irrelevance.

The Argument Against Hallucinations

If there is one thing the general public knows about ChatGPT, it’s that it hallucinates. (A term coined in passing by the great Andrej Karpathy, incidentally, rather than some industry plot to anthropomorphize the technology, a theory I have actually heard.) Which is often true! …But.

(First, as an aside, the free version of ChatGPT is so bad that I’m sort of embarrassed on OpenAI’s behalf that it still exists. I have known several people who were rightfully contemptuous of modern AI because that was their example of it … who reconsidered rapidly after playing around with GPT-4.)

Very few—virtually zero—industrial uses of AI involve simply asking models questions. The fact that they can answer arbitrary questions, sometimes correctly, sometimes not, is what the general public thinks they’re for. But in fact that capability is, to those of us who build with it, an interesting but not especially relevant side effect of their training.

Instead we use them as what Rohit Krishnan calls “anything-from-anything machines” — to transform information. From source code to suggestions. From historical data to predictions of the future. From huge unstructured mishmashes of raw data to polished reports that highlight the important needles in those haystacks. From device manuals to answers to questions about those devices. Note that the last example is not the same thing as an AI answering questions about its training data— because we feed the model relevant bits of the manuals along with the question, and have it transform that data into an answer.
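To make the device-manual example concrete, here is a minimal sketch of that pattern: pick the relevant bits of the manual, then hand them to the model together with the question. Everything in it is hypothetical and for illustration only. The manual text is invented, the word-overlap ranking is a crude stand-in for whatever retrieval a real system would use, and no model is actually called; the sketch stops at building the prompt.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real retrieval machinery."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_passages(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank manual passages by word overlap with the question, keep the best k."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble the text a model would be asked to transform into an answer."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the manual excerpts below. "
        "If they do not contain the answer, say so.\n\n"
        f"Manual excerpts:\n{context}\n\n"
        f"Question: {question}\n"
    )


# Hypothetical manual text, invented for illustration.
MANUAL = [
    "To reset the router, hold the reset button for ten seconds.",
    "The status light blinks amber while firmware is updating.",
    "Warranty claims require the original proof of purchase.",
]

question = "How do I reset the router?"
selected = top_passages(question, MANUAL)
prompt = build_prompt(question, selected)
print(prompt)
```

The point of the design is that the model is asked to transform text it has been handed, not to recall facts from its training data.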

When you use modern AI models as anything-from-anything machines, and you feed them good data, and don’t try to force their answers into a format they can’t manage from that data, they don’t hallucinate. I won’t say they’re always right, mind you. Again, they’re about on par with (an arbitrary number of) college interns. But they consistently generate good outputs. The general public is always amazed to hear this…

The Argument Against Unemployment

“Tech destroys jobs!” is an old and tired argument, and not even an argument against technology itself. No one would prefer a world of elevator operators, telephone operators, hand looms, and horse-drawn carriages. This argument is not with technology but with how technological transitions are managed. Or with what people call ‘capitalism,’ in quotes because many Americans do not realize that capitalism and capitalism as practiced in America are distinct things. In a perfect world, technological transitions would be managed by active and thoughtful governments! If that seems like too much to ask … maybe that’s not actually the tech industry’s fault.

The argument against AI is slightly more interesting: “AI destroys the jobs that people actually want to do!” I’ve written (very speculatively) about a version of this myself. And it’s true that, now that anyone can get any illustration they can describe, on the spot, the demand for illustrators will plummet soon if it hasn’t already.

But that’s still the transition problem … and doesn’t making desirable jobs available to anyone, for (almost) free, actually mean you’ve spread a good thing vastly more widely? I’m certainly not suggesting that everyone’s an artist now. But everyone, from kids to senior citizens, can now play with art—with songs, or sonnets, or videos—in ways that were previously unavailable. I agree we should make this transition better for those it’s hard on. But do people really think we are going to look back on a time when the ability to play with these engines of creation was not widely available, and think “oh, life was so much richer then”?! I’m a bit staggered by that apparent belief.

The Argument Against Climate Doom

“OK fine,” some say, “AI might be useful, but it uses so much energy, during a climate crisis! That’s obviously bad, right?”

It’s worth noting that many such arguments seem to be in hilariously bad faith. An estimate that training GPT-3 emitted 550 tons of CO2 leads to the conclusion “Training AI models generates massive amounts of carbon dioxide.” Massive! 550 tons is roughly as much as that generated by a single transatlantic flight, when aviation is only 3% of global emissions … and yet articles full of grave concern about the climate consequences are not published every time another long-haul flight takes off, the way they seem to be each time a new model is trained. It’s almost as if we realize that those individual flights don’t really move the needle.

More generally, though, this does highlight a genuine worldview chasm between the tech mindset and the modern progressive mindset. The latter group has internalized scarcity: that because of the climate crisis, we must reduce our energy use in any way possible, to save the planet and ourselves.

The tech mindset is that the progressive mindset is staggeringly ignorant — because, somehow without the median person noticing, we now live in a world of clean energy abundance, and moreover, this abundance is growing exponentially.

Between 2000 and 2022, installed solar power doubled every two years, a new Moore’s Law. …And then, in 2023, the growth rate really took off. Replacing existing coal plants with solar or wind power is now cheaper than simply keeping those same coal plants running. Yes, cheaper. Yes, really.
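If "doubled every two years" sounds abstract, a quick sketch shows what it compounds to. This takes the doubling claim above at face value as a clean exponential, which is a simplifying assumption; real-world growth is lumpier.

```python
def growth_factor(years: float, doubling_time: float) -> float:
    """Total growth multiple after `years` of steady doubling."""
    return 2 ** (years / doubling_time)


# 2000 to 2022 with a two-year doubling time, per the claim in the text.
factor = growth_factor(2022 - 2000, 2.0)

# The equivalent steady year-over-year growth rate.
annual_rate = growth_factor(1, 2.0) - 1

print(f"growth over the period: {factor:,.0f}x")
print(f"equivalent annual growth: {annual_rate:.0%}")
```

Eleven doublings is a factor of 2,048, or about 41% growth every single year for more than two decades.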

The climate crisis is real and we need to reduce the emissions of agriculture, concrete, steel, internal combustion engines, etc., while moving from fossil fuels to renewable energy. These things are true. But at the same time, clean energy is not scarce. In fact it is abundant, and growing more so every year. Essentially all big tech companies, and hence all AI models, use clean energy. And the abundance mindset says, correctly, that whether you throw up a couple more solar and wind farms to power AI models, or not, is not going to perceptibly move the climate-change needle either way … unless of course AI ultimately helps us accelerate our transition away from fossil fuels.

The Argument Against Skynet Doom

I am so tired of this argument, and I have argued it before, so I’m just going to boil it down to my simplest and yet most potent objection. Dear AI doomer: I grew up in the shadow of nuclear doom, and there was nothing hypothetical about those thousands of warheads, and yet here we all are. I concede that your anxieties were briefly interesting, but now they bore me to tears. So please go away … and come back and wake me up when your concerns are no longer entirely, completely, 100% hypothetical.

…In the meantime, I expect to sleep deeply and well.

The Argument For Arbitrage

“Pessimists sound smart. Optimists make money.” — Nat Friedman

Consider the four options:

If you’re an AI pessimist, and you’re wrong, you’re letting a generational opportunity, the technology that will define the future, pass you by in its relatively early days when it’s still relatively accessible. Oops. Ugh.

If you’re an AI pessimist, and you’re right, you will get to sound smart, but also be annoyed by how many people will ride the AI bubble to success before it pops.

If you’re an AI optimist, and you’re wrong, you will feel foolish, but pay only the standard opportunity cost for being wrong; you aren’t, like, consciously turning your back on a once-in-a-generation opportunity.

If you’re an AI optimist, and you’re right—look, just be magnanimous, OK?