It is difficult to make AI predictions, especially about the future

But many brave souls have tried, and their successes and failures are instructive

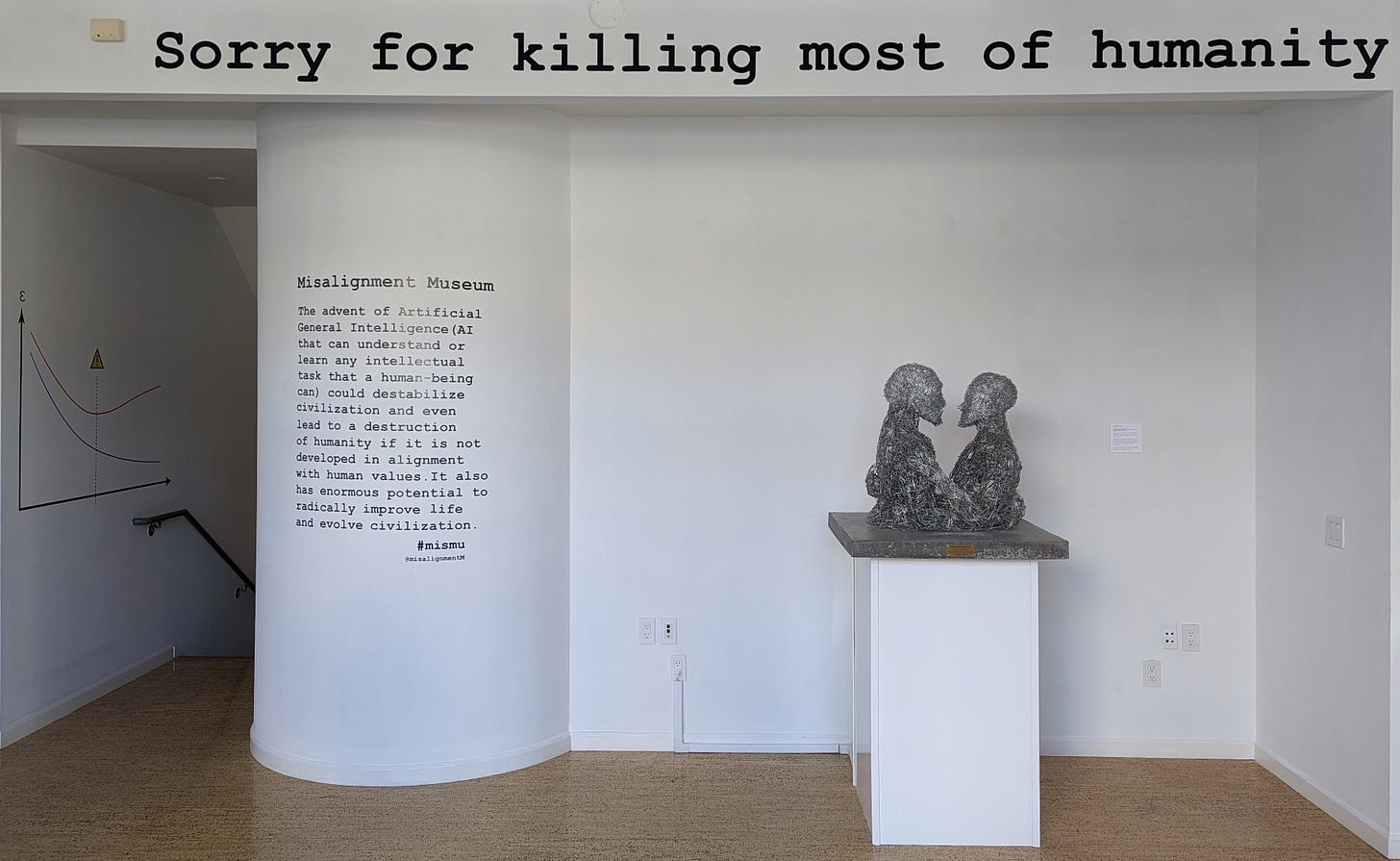

The AI boom has birthed many side-effect shockwaves, and the most popular, by far, is the boom in AI prognostication. Er, I mean predictions about AI, not by AI. (So far. Mostly.) LLMs are a dead end! Superintelligences will FOOM and kill us all! AI is so dangerous, it must be stopped, or at least paused! AI is probably “an economic story for the 2030s, not the next few years!” Heck, there’s even a (very good, surprisingly funny) pop-up museum in San Francisco devoted to AI futures:

Who’s right? Wrong question. Everyone’s wrong; that’s a given. As Nobel laureate Niels Bohr said, “It is difficult to make predictions, particularly about the future.” However. I happen to have a fairly unusual perspective on predictions, being (relatively newly) an engineer at a company, Metaculus, whose entire raison d’être is to provide a platform for predictions. The idea (to oversimplify) is that while everyone is individually wrong, their errors tend to cancel one another out, so a group of forecasters is far more likely to be collectively correct.
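To make the error-cancelling idea concrete, here is a minimal Python sketch under loudly simplified assumptions: each hypothetical forecaster guesses a single true value plus independent Gaussian noise, and the "crowd" forecast is just the plain median of those guesses. The function `simulate_crowd` and its parameters are illustrative inventions, and Metaculus's actual community-prediction aggregation is not necessarily a plain median.

```python
import random
import statistics


def simulate_crowd(true_value: float, n_forecasters: int, noise_sd: float,
                   seed: int = 0) -> tuple[float, float]:
    """Return (mean individual absolute error, absolute error of the crowd median).

    Illustrative assumption: each guess is the true value plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    guesses = [rng.gauss(true_value, noise_sd) for _ in range(n_forecasters)]
    # Average distance between a single forecaster's guess and the truth.
    individual_error = statistics.fmean(abs(g - true_value) for g in guesses)
    # Distance between the pooled (median) forecast and the truth.
    crowd_error = abs(statistics.median(guesses) - true_value)
    return individual_error, crowd_error


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        ind, crowd = simulate_crowd(true_value=50.0, n_forecasters=n, noise_sd=10.0)
        print(f"{n:5d} forecasters: typical individual error {ind:5.2f}, crowd error {crowd:5.2f}")
```

The crowd's error shrinks as independent errors cancel. The catch is the word "independent": if every forecaster shares the same bias (say, the consensus on self-driving cars discussed below), the median inherits that bias rather than cancelling it.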

What’s more, Metaculus had AI as a primary focus area long before it was cool. As such it already hosts a lot of AI questions and forecasts. I encourage you to go through those yourselves; they’re interesting reading. Here, though, I (mostly) don’t want to discuss whether those predictions are/were right or wrong, but rather, something which I find much more interesting: how they change over time. My thesis is that this may help inform how and when our own predictions should change…

If This Future’s So Cool, Where Are Our Self-Flying Cars?

OK, self-flying remains a little too aspirational. In fact, so does self-driving, mostly. As this prediction from five years ago shows —

—there was enormous optimism, entirely misplaced, about self-driving cars in the mid-2010s. It’s worth noting that Waymo does indeed now have fully autonomous cars in Phoenix and San Francisco …but the reality is/was inarguably far behind expectations. As shown, there were periods when we thought it likely we’d have them by five years ago. This absolutely did represent the median view in tech; I was there, I can attest to this, I was one of those believers. It’s a useful reminder that not all AI expectations have been rapidly outpaced by reality, and not all problems are addressable by LLMs.

Games

It’s hard to imagine now, but four years ago, OpenAI’s most prominent activity consisted of video game tournaments against professional esports teams. What’s interesting here is the forecasters’ consistent belief that OpenAI would win despite previously having lost most games against pro players. It indicates that collectively we do have some sense of the trajectory, not just the state, of the art.

…but then, forecasters also (collectively) predicted that it would be another eighteen months before AI defeated a pro at StarCraft … one month before it happened. Interestingly, though, eighteen months earlier the community prediction was, briefly, exactly correct! So: AI forecasters are good at general trajectory, but less good at the exact slope of that trajectory? I could buy that.

How We Get There

We used to sorta kinda believe that brain emulation was how we would achieve AGI, until gradually we really really didn’t.

We used to think that creating AI systems which wrote code based on natural language prompts was a quite difficult, medium-term thing, apparently. Oh, the innocence of AI youth! Right up until 2020, we were guessing 2027!

…And then GPT-3 came along. It’s worth noting, though, that early on the prediction was much closer to being correct, and for a while the most optimistic 25% of forecasts were quite correct. Perhaps a lesson here is that people are really reluctant to predict a big step change in the next year or two. Which is awkward, given that recent AI history consists largely of big step changes…

Improvement Expectations

There are a few Turing Test questions. I think the Turing Test is historically interesting but so subjective that it’s unfortunately quite a poor test. But what’s really striking here is how the community prediction suddenly falls off a cliff in May 2022.

When will we get AI in our AI? I’m not convinced GPT-4 couldn’t handle basic transformer code like this today, but it’s interesting that in 2017 we predicted “ten years from now,” i.e. 2027, and now we predict … four years from now, or 2027.

(To my mind this is not another example of being more correct early on, because “ten years” is, to me, a special case. My shortform psychology is that when people guess “ten years from now,” they really mean “I have no idea, but probably within my lifetime, I hope.” But when they guess four years from now, they really mean “four years from now.”)

And, fine, OK, the one everyone talks/argues about constantly.

Note that we’ve gone from 32% to 83% within the last sixteen months. My thesis is growing into “we’re much better at forecasting linear trajectories than exponential ones, and much better at continuous trajectories than step functions (or their lack).”
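One way to see why exponential trajectories trip up forecasters is to extrapolate one with a straight line. The following Python sketch is purely illustrative: the doubling "capability metric" is hypothetical, not drawn from any Metaculus question, and `linear_forecast` simply projects the average per-step change observed so far.

```python
def exponential_series(start: float, growth: float, n: int) -> list[float]:
    """Hypothetical metric that is multiplied by `growth` each period."""
    return [start * growth**i for i in range(n)]


def linear_forecast(history: list[float], steps_ahead: int) -> float:
    """Project forward along a straight line using the average per-step change so far."""
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate a slope")
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + slope * steps_ahead


if __name__ == "__main__":
    series = exponential_series(1.0, 2.0, 10)  # doubles every period
    observed, future = series[:5], series[5:]
    for k, actual in enumerate(future, start=1):
        predicted = linear_forecast(observed, k)
        print(f"t+{k}: linear forecast {predicted:7.2f}   actual {actual:7.1f}")
```

With five observed periods (1, 2, 4, 8, 16), the straight-line forecast for the next period is 19.75 against an actual 32, and five periods out it is 34.75 against 512. The linear forecaster gets the direction right but the slope wrong, and the error compounds the further out it looks.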

Grimdark Futures

Look, there’s no use pretending everyone isn’t talking about the extermination of humanity. This subject makes me roll my eyes violently, but I do think AI as a powerful tool, as opposed to, y’know, an imminent evil god, is a real risk to think hard about. And this risk in particular is near and dear to my heart as I wrote a whole novel about it, featuring neural networks, way back in 2009! (Ahead of my time, that’s me.)

This seems optimistic to me; a point of my novel was that configuring an autonomous drone to home in on e.g. a particular license plate is really not that hard. But we’ll see. A more general AI risk problem is addressed here:

That seems correct. Not least because “control” is really in the eye of the beholder. If Russia releases a cloud of kamikaze drones above Ukraine and tells them to go forth and wreak maximum havoc, are they “aligned” and “under control”? That really really depends on who you ask.

Abundance Futures

Let’s talk happier thoughts. The reason we’re pursuing AI is that it could do our undesirable work for us, raise billions from poverty to wealthy leisure, and help us make the classic/clichéd scientific breakthroughs such as ending cancer, solving climate change, extending life, defeating pandemics before they begin, etc. Does this seem likely? Well, some tangible steps along the way have surprisingly high community prediction numbers:

And this is even more optimistically mindblowing:

And this is perhaps my favorite of all the questions here. Let the construction of Lego empires of previously unthinkable enormity commence!

The Upshot

So what have we learned? That we’re bad at predicting AI, especially since its progress seems to come as a series of unexpected breakthroughs and/or plateaus; but we aren’t terrible at it, and the most aggressive/optimistic predictions have seemed most likely to be correct. That medium-term optimism seems more likely than medium-term doomerism, though all bets remain off for the long term. And that self-driving cars are still further away than anyone expected.

A closing thought: what will the consequences be if we get surprisingly accurate AI forecasters before we get AGI? It sounds weird, I know. And yet, compare the below to the AGI question above…
