A striking thing about large language models and diffusion models — what we talk about nowadays, when we say “AI” — is that they have no memory. Zero. None. They are exactly the same system, doing exactly the same thing, every single time. Everything they “know” about their current context comes from “prompts” we feed to them. LLMs are like Guy Pearce’s protagonist in Christopher Nolan’s movie Memento, who has lost any ability to form new long-term memories, and so relies on notes, tattoos, and photographs to understand his world.

This is, obviously, incredibly restrictive. It’s remarkable what they can do despite it!
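To make the "no memory" point concrete, here is a minimal sketch in Python. Everything in it is a hypothetical stand-in: `fake_llm` is not a real model or API, just a pure function of its prompt, and `Chat` is an invented wrapper. The point it illustrates is that any apparent memory in a chat session lives in ordinary software that replays the whole transcript on every call.

```python
from typing import List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model: a pure function of its prompt."""
    # It "answers" by reporting how much context it was handed, nothing more.
    return f"I can see {len(prompt.splitlines())} line(s) of context."


class Chat:
    """The 'memory' lives out here, in the wrapper, not in the model."""

    def __init__(self) -> None:
        self.history: List[str] = []

    def send(self, user_message: str) -> str:
        self.history.append(f"User: {user_message}")
        # Replay the entire transcript every time; the model keeps nothing.
        prompt = "\n".join(self.history)
        reply = fake_llm(prompt)
        self.history.append(f"Assistant: {reply}")
        return reply


chat = Chat()
print(chat.send("Hello"))
print(chat.send("Do you remember me?"))
```

Call `fake_llm` twice with the same prompt and you get the same answer both times; the only reason the second `send` sees more context is that the wrapper fed it more.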

A whole industry has already developed to try to mitigate this enormous lacuna. Libraries like LangChain help “summarize” broad swathes of data into viable prompts. Vector databases such as Pinecone are used to annotate a user’s handwritten prompt with search results before feeding the combination into the LLM. (This is not their only use, but it’s a key one.)
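Here is a rough sketch of that "annotate the prompt with search results" pattern. It is a toy under loud assumptions: the bag-of-words `embed` function stands in for a learned embedding model, a plain Python list stands in for a vector database, and none of the names below come from Pinecone, LangChain, or any real library.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use learned dense vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question: str, documents: List[str], k: int = 1) -> str:
    """Prepend the retrieved snippets to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


docs = [
    "The office closes at 6pm on Fridays.",
    "Our refund policy lasts 30 days.",
    "The cafeteria serves lunch at noon.",
]
print(build_prompt("What is the refund policy?", docs))
```

The model never "learns" the refund policy; the relevant snippet is simply looked up and pasted into the prompt each time the question is asked.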

And then there’s fine-tuning: creating a whole slew of example prompts and desirable results, and training the model on them specifically. Fine-tuning is very different and very powerful, in that it actually changes a model’s weights and therefore is, in fairness, a real kind of memory … but one so expensive and time-consuming that it’s almost always a one-time process, used to take a “stock” LLM and customize it for a specific purpose — which it will perform, again, without any memory whatsoever.

This amnesiac ephemerality is … probably not the popular understanding of AI?

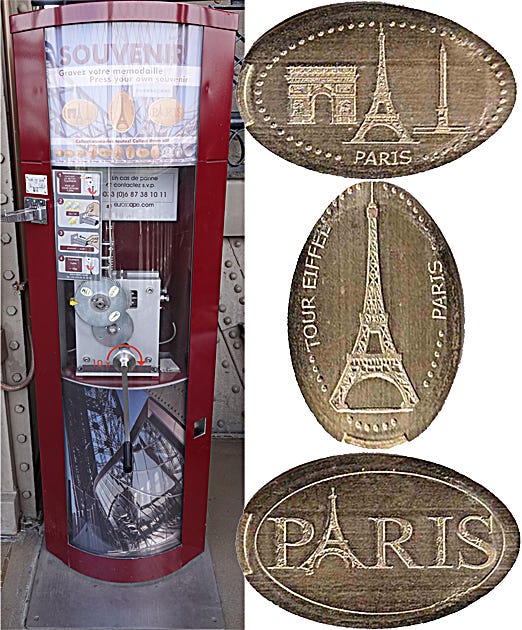

I think People In General do get (now) that AI models need to be trained, but I suspect many think they’re always being trained. Perhaps that is true to some extent — who knows what happens behind closed doors, between version bumps, at OpenAI and Anthropic — but regardless, the knowledge encoded within any given model remains fixed at inference time. They’re not thinkers; they’re more like those machines that take pennies and stamp them into art objects.

Now, here the “pennies” are (sometimes novel-length!) sequences of text, and the “art objects” can be no less lengthy and complex … but no matter how extraordinary their results, these machines have no internal sense of “now” or “time.” Such concepts are completely orthogonal to them.

(This is, as you might imagine, one reason I continue to find it difficult to take “AI doom,” “these are the first generation of machines that will imminently recursively self-improve and destroy us all,” etc., arguments particularly seriously, except as a testament to the enormous cultural power of implausible science fiction. But hey, that’s good, right?!)

Does their lack of memory make LLMs hard to model? Not at all! Entirely the opposite! Ironically enough we are now far enough into the computer revolution that people sometimes forget that this is how computers have always worked. We expect computer systems to retain data, to have memory, but, as this delightfully antediluvian University of Rhode Island web page reminds us, computers themselves have, since the days of vacuum tubes, been divided into CPUs — processors — and memory. (OK, fine, microprocessors have registers and caches, but I think you’ll concede that doesn’t count as meaningful memory.)

LLMs are the new CPUs. This isn’t a perfect metaphor — one doesn’t fine-tune CPUs, or use more powerful ones to ‘distill’ less powerful but more specialized ones — but it’s a remarkably effective one nonetheless. As Rohit Krishnan put it, even more incisively, LLMs are fuzzy processors.

It is famously (in the software world) said “There is no problem in computer science which cannot be solved by another abstraction layer.” Well, we have now created so many abstraction layers on top of our hardware that we have attained an abstraction which, ironically, looks a lot like the hardware.

Once we wrote binary code and fed it directly into the processor. (Fun fact: I actually did this, once, early in my career! Bits of test software for a Nortel Networks digital signal processing chip.) Then we wrote assembler. Now we write higher-level languages that are compiled, or interpreted, into the processor-specific machine code.

Similarly, we first just fed simple prompts into LLMs. Then the field of “prompt engineering,” the assembler of prompting, erupted. Now we use vector database searches, LangChain, Guardrails, and so forth to ‘compile’ prompts for LLMs. The parallels are pretty striking, as is how much faster this has happened.

(Classic computer models even have specific “alignment units” for memory, and often separate “arithmetic logic units,” which would be useful here too, since as we know LLMs can’t really do math…)
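As a hedged illustration of what a separate "arithmetic logic unit" beside a fuzzy processor might look like, here is a small sketch. The `alu` and `route` functions are invented for this example, and the `llm` argument is any callable you supply; this is one possible arrangement, not how any particular product works. Pure arithmetic goes to a deterministic evaluator, and everything else falls through to the model.

```python
import ast
import operator
from typing import Callable

# The only operations this toy "ALU" understands.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def alu(expression: str) -> float:
    """Evaluate +, -, *, / on numeric literals exactly, without using eval()."""

    def ev(node: ast.AST) -> float:
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError("unsupported expression")

    return ev(ast.parse(expression, mode="eval").body)


def route(request: str, llm: Callable[[str], str]) -> str:
    """Send pure arithmetic to the ALU; hand everything else to the model."""
    try:
        return str(alu(request))
    except (ValueError, SyntaxError):
        return llm(request)


print(route("12345 * 6789", llm=lambda text: f"(model answers: {text})"))
print(route("Write me a haiku", llm=lambda text: f"(model answers: {text})"))
```

The design choice is the same one classic architectures made: keep the exact, boring work in a dedicated unit and let the general-purpose part handle the rest.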

If this metaphor has predictive power, it suggests that the makers of “foundation models,” OpenAI and Anthropic et al., are more like chip-makers such as Intel and Motorola and AMD than they are like software behemoths such as Google and Microsoft. It suggests that the AI industry will partition into two largely separate fields: one that builds foundation models / fuzzy processors, and one that writes and promulgates the software layers around them.

This seems likely — but not guaranteed. You’ll note that fine-tuning, again, doesn’t really fit into the metaphor, which is one reason I find it particularly interesting; that makes it seem like an escape route from a recapitulation of the same journey that microprocessors took. As such, the next post here will be a semi-deep dive into fine-tuning. Watch this space…

Okay, I'm switching sides. Call me a "techno-optimist" now. But you're the one who said that no one really understood what was going on under the hood of the LLMs any more. Have you reconsidered?