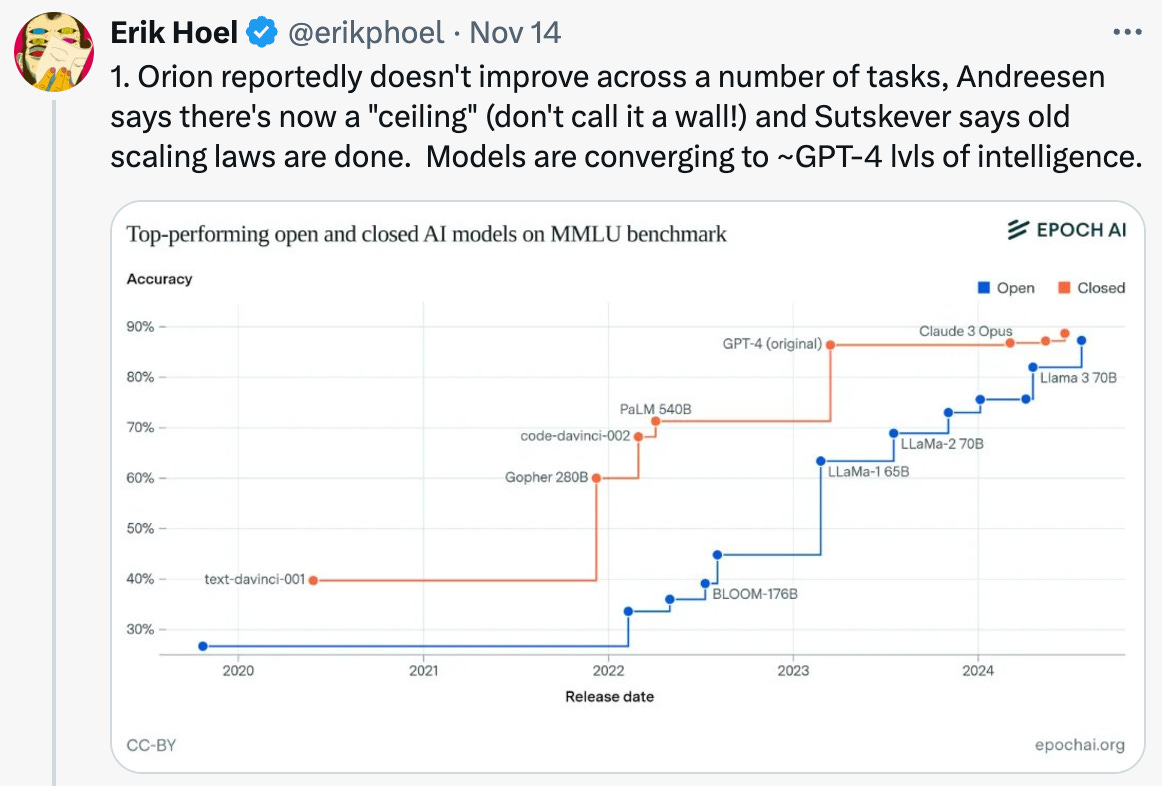

It’s unofficial: LLMs are hitting a wall.

This isn’t shocking. Some of us have been saying “This is an S-curve, not a takeoff” for almost two years. The week GPT-4 launched, the ‘community prediction’ for “When will the first weakly general AI system be devised, tested, and publicly announced?” at my former employer Metaculus dropped to October 2025 — less than a year from today! It has since receded to 2027 … and counting.

But what does it mean — for the frontier labs, for AI engineering, for AI policy? Well:

Labs

It’s very early days, the existence of a single slowdown is not dispositive, etc., but it seems at least possible there is only so much pure intelligence we can mine from the ore of human language, no matter how much of its articulation we can find or create.

This of course doesn’t mean AI itself will hit a wall. New architectures, new data sources, inference-time scaling, etc., are all promising avenues. But we can’t expect qualitative leaps from inference-time scaling: as Erik Hoel puts it, “you can't search your way to AGI or superintelligence any more than I could search my way to a Theory of Everything by having 10,000 physics undergrads vote on proposals.”

All of which, you have to admit, is … pretty awkward … if you are a money-losing frontier lab whose imputed value stemmed in part from the great strides expected along the road you were already traveling. Exploring new roads, without knowing which are dead ends, is another research model entirely. So: not great for OpenAI, Anthropic, Mistral, etc., unless they already have some kind of breakthrough new architecture(s) on tap internally. Probably neutral for DeepMind, which seems more architecturally experimental and is insulated by Google’s giant money fountain. And outright positive for those reportedly already working on whole new architectures, e.g. Ilya Sutskever & Daniel Gross’s SSI and John Carmack’s Keen.

Engineering

Whatever happens, it won’t be an AI winter. There will be no more AI winters. In AI winters, nobody except a few true believers cared about neural networks. But even if it now plateaus forever, generative AI is already, and will remain, an incredibly useful general-purpose cognitive tool, amanuensis, and anything-from-anything machine. (Albeit one that will continue to struggle with Moravec’s workplace paradox.)

In fact, this slowdown is arguably good for the majority of today’s AI startups. A huge risk (or at least perceived risk) that many faced was the prospect of being entirely wiped out by the mythical tsunami of the Next Frontier Model, capable of doing by itself, out of the box, whatever a startup had previously painstakingly built atop the Last Frontier Model. That was always unlikely, but it made (a subset of) both customers and investors very hesitant. Much less so in the light of this looming wall.

Policy

This is going to be kind of embarrassing for the more distraught AI safetyists. Who will presumably embrace that embarrassment! …since they (must) want to be wrong. This extends to the “Situational Awareness” kinds of analyses. One thing I learned at Metaculus is that while extending continuous lines into the future is indeed an important part of quality prognostication, when you encounter a discontinuity in those lines, you probably need to update your forecast rather a lot.

Put another way, if you model AI risk as a stock, it is really very much a growth stock; you’re valuing future risk, not today’s, which is minimal. You may have noticed that when the growth rate of an early-stage tech company slows palpably, its stock falls hard. Similarly, when AI capability growth slows, as seems to be happening, your AI Risk ‘stock’—i.e. your anxiety about that risk—should plummet accordingly.

(I won’t name names, but in mid-2023 I attended a dinner of some Very Prominent AI Safetyists where we were asked about our expectations of LLM capabilities in 2026. If memory serves, and admittedly it may not, we were asked questions like “Will LLMs three years from now be able to create a de novo Internet worm from scratch / a standing start / a single set of prompts, and with it infect a sizable number of computers?” I was one of only two people, of the 25 or so in the room, who expressed any skepticism at all. 2026 is now only 14 months away…)

The flip side, from a policy perspective, is that the warts and foibles of today’s models are likely to be with us for some time. I suspect that in the near future policymakers will have to deal with a population-level revealed preference for mass usage of today’s chatbots. Despite all the (100% correct) warnings about hallucinations and dangerous errors and how LLMs are inferior to human tutors and doctors and editors and therapists, people will use them en masse and continue to do so … because they get value out of them, and because professional humans are rare and busy and expensive while ChatGPT and Claude are right there, always, for $20/month. Flawed as they are, most of the time their answers are far better than no answers at all.